Super-intelligence? No, not even high school intelligence

A high school math project illustrates what's missing from AI

There are many claims that current GenAI models are as intelligent as Einstein, or at least as intelligent as high school students. For example, former Google executive Mo Gawdat has claimed that GPT-4 matches the IQ of Einstein and that we could be just a few months away (GPT-5) from a machine with 10 times the IQ of Einstein.

This article in Quanta Magazine and the source article on arXiv, by Joshua Broden, Malors Espinosa, Noah Nazareth, and Niko Voth, put the lie to those claims. High school students are smart in ways that current AI models cannot match.

A team of high school students, coached by Espinosa, made new discoveries in topology that also illustrate the kinds of capabilities an artificial general intelligence will need and that today's models lack.

Gawdat chose to compare current AI models to Albert Einstein because Einstein is a kind of poster child for high intelligence. He won the Nobel Prize in Physics for his work on the photoelectric effect. He created something unanticipated at the time: he reconceptualized light as consisting of both waves (which was widely accepted) and particles (which was not). The particle view of light had essentially disappeared from the physics of his day. Einstein achieved an insight that changed how we think of light and, in doing so, solved a number of problems. He did not just parrot what others had written.

So far, science has been done by thinking humans and not so much by machines (but see this paper). Although its content differs from most people’s daily concerns, science has the advantage that its reasoning is explicit and its processes are well studied and well documented. That Albert Einstein is one of the favorite comparisons of AI promoters is one more reason to look at science and math as a model for understanding how intelligence works.

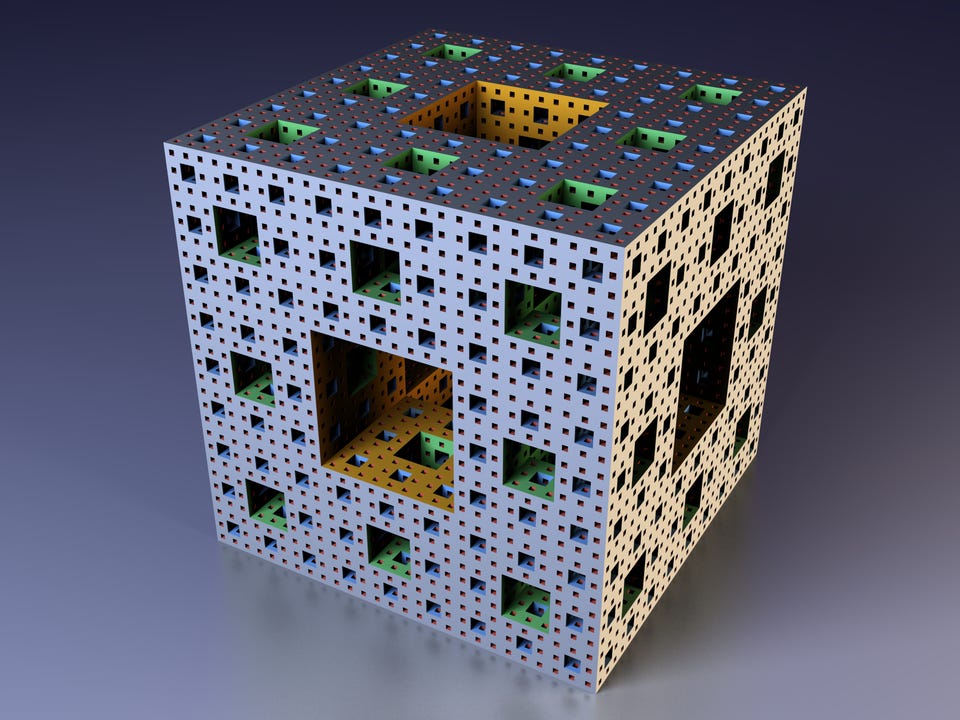

The student research concerned two objects: mathematical knots and the Menger sponge, a fractal object that resembles a Rubik’s Cube from which cubelets have been removed.

This is how you construct a Menger sponge:

Start with a cube and divide it into 27 smaller cubes (cubelets), so that it looks like a Rubik’s Cube.

Remove the small cube at the center of the large cube.

Remove the small cube at the center of each face. The result is a cube with holes that run through the center of each face and the center of the cube, leaving a total of 20 smaller cubes.

Divide each of the remaining 20 cubes into 27 still smaller cubes in the same way and repeat the process on each of them.

Continue to divide and remove cubelets ad infinitum.
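The construction steps above can be sketched in a few lines of Python. This is an illustrative sketch, not code from the students' paper: the function name `menger_cubelets` is hypothetical, and cubelets are identified by integer coordinates of their lower corners in units of one cubelet side. At each step, a subcube is dropped when its position is the middle along at least two axes, which is exactly the six face centers plus the body center.

```python
from itertools import product


def menger_cubelets(level):
    """Return the cubelets kept after `level` divide-and-remove steps.

    Each cubelet is given by the integer coordinates of its lower corner,
    measured in units of 3**-level (a hypothetical encoding for illustration).
    """
    cubes = [(0, 0, 0)]
    for _ in range(level):
        next_cubes = []
        for x, y, z in cubes:
            # Split the cube into 3 x 3 x 3 subcubes with offsets 0, 1, 2 per axis.
            for dx, dy, dz in product(range(3), repeat=3):
                # Offset 1 is the middle slice; two or more middles means the
                # subcube is a face center (6 of them) or the body center (1).
                if (dx == 1) + (dy == 1) + (dz == 1) >= 2:
                    continue
                next_cubes.append((3 * x + dx, 3 * y + dy, 3 * z + dz))
        cubes = next_cubes
    return cubes
```

For example, `len(menger_cubelets(1))` is 20 and `len(menger_cubelets(2))` is 400: every step multiplies the number of cubelets by 20.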

A fascinating property of a Menger sponge is that, as the divide-and-remove process is repeated, the total volume of the cubelets decreases toward zero while the total of their surface areas increases without bound. At the limit, the sponge is neither a solid nor a surface; it is a curve, a one-dimensional object in the topological sense.
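Both limits in the paragraph above follow from simple counting: after n steps there are 20^n cubelets, each with side 3^-n, so the total volume is (20/27)^n and the summed cubelet surface area is 6 · (20/9)^n. The sketch below (the name `sponge_totals` is hypothetical) computes these exactly with Python's `fractions` module. Note that, as the paragraph describes, it sums the areas of all cubelets, including faces shared between neighbors.

```python
from fractions import Fraction


def sponge_totals(level):
    """Return (total volume, summed cubelet surface area) after `level` steps."""
    count = 20 ** level                  # cubelets kept after `level` steps
    side = Fraction(1, 3 ** level)       # side length of each cubelet
    volume = count * side ** 3           # equals (20/27) ** level, shrinks to 0
    area = count * 6 * side ** 2         # equals 6 * (20/9) ** level, grows without bound
    return volume, area
```

Because 20/27 is less than 1 and 20/9 is greater than 1, the first quantity shrinks and the second grows at every step.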

Menger showed in 1926 that basic curves, such as circles, trees, and graphs, can be topologically embedded in the sponge, which essentially means that any of these curves can be drawn within the sponge without any part of the curve falling into or crossing a hole. The students knew of Menger’s proof that such curves are topologically equivalent (homeomorphic) to subsets of the sponge, but it was unknown whether other kinds of curves could also be inscribed in it.

The idea of matching curves to one another is a key part of topology. Topology is a branch of geometry that studies shapes that can be stretched, shrunk, and moved (“the study of geometric properties and spatial relations unaffected by the continuous change of shape or size of figures”). In topology, the distance between points is not important, but the connections between points are. Two objects are equivalent if one can be stretched or shifted, without tearing or gluing, until its connections between points match those of the other. A sphere and a cube are topologically equivalent, for example.

Menger sponges, knots, and topology are interesting in their own right, but here I want to use them to illustrate what would be needed to achieve general intelligence. General intelligence is often defined as being able to solve many different problems, but it may be more useful to define it as being able to solve many different kinds of problems, or as being able to solve entire problems from identification to solution.

In answering their knot question, the students engaged in thought processes that I believe a language model cannot carry out. Among other things, an artificial general intelligence will need to apply to other problems the same kinds of thought processes that the students exhibited in deciding whether knots can be inscribed in a Menger sponge.

The students defined the problem as topological

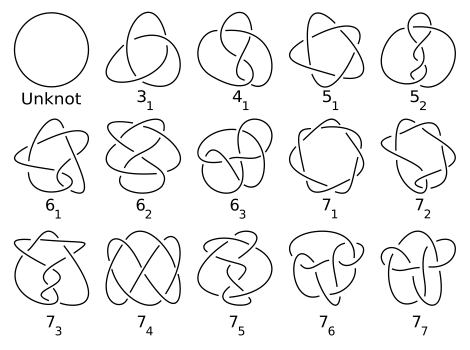

The students took on the problem of proving whether a topological knot could be embedded in the sponge. A mathematical knot is like a string that has been tangled and whose two ends are then joined to form a closed loop. Mathematical knots are three-dimensional: where one part of the knot crosses another, one strand passes over the other, and the strands do not join at the crossing. Menger’s result treated curves without regard to how they might be knotted in three dimensions. The students wondered whether three-dimensional knots could also be inscribed in a sponge.

The knots problem required original thinking

Students are usually given exercise problems to solve. Even if they do not know the answer, they know that someone, presumably their teacher, does. Students may come up with wrong solutions, but they rarely face problems for which there is no known answer. These students had reason to think that there might be a way to prove that knots could be inscribed in a sponge, but they did not know whether it could be proved. They could not just parrot a known solution. It was conjectured that knots could be embedded, but no one had yet proved the conjecture.

The students chose a representation to use when solving the problem

Because there was no known method for solving this problem, the students had to select a representation for it. AI models are typically given a framework in which to solve a problem. For example, medical cases are described in a specific way, and the computer is given a finite list of potential diagnoses to consider. Language models are built from tokens and network structures chosen by their designers. Any problem solver that handles the full process needs to be able to select the framework and units in which to solve the problem. In the case of current AI models, that selection is done by humans.

Here is an example problem: what digits come next in the sequence 6842075? Hint: solving this problem will require finding an appropriate representation.

The students decided to represent the knots using an arc presentation in grid form. This means that they stretched and straightened the knots so that they consisted solely of horizontal and vertical lines. Topology allows such transformations because straightening the arcs preserves the knot’s pattern of connectedness, even as it changes its measurements. They decided that where two lines crossed, the vertical line would be treated as passing above the horizontal one.
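A grid-form arc presentation can be stored very compactly. The sketch below assumes the common "grid diagram" encoding from knot theory, which the article itself does not spell out: an n × n grid with one X mark and one O mark in every row and every column. Each row's X and O are joined by a horizontal segment, each column's by a vertical segment, and, following the convention described above, the vertical strand passes over the horizontal one wherever they cross. The function name `grid_diagram` and the example grid are hypothetical illustrations, not the students' data.

```python
def grid_diagram(xs, os):
    """Describe a knot given as a grid diagram (an arc presentation on a grid).

    xs[r] and os[r] are the columns of the X mark and the O mark in row r.
    Each row and each column must hold exactly one X and one O.
    Returns (horizontal, vertical, crossings, components).
    """
    n = len(xs)
    assert sorted(xs) == list(range(n)) and sorted(os) == list(range(n))
    assert all(x != o for x, o in zip(xs, os))

    # Row index of the X mark and of the O mark in each column.
    x_row, o_row = [0] * n, [0] * n
    for r in range(n):
        x_row[xs[r]] = r
        o_row[os[r]] = r

    # One horizontal segment per row, one vertical segment per column.
    horizontal = [(r, min(xs[r], os[r]), max(xs[r], os[r])) for r in range(n)]
    vertical = [(c, min(x_row[c], o_row[c]), max(x_row[c], o_row[c])) for c in range(n)]

    # A crossing occurs where a vertical segment passes strictly through the
    # interior of a horizontal one; the vertical strand is the one on top.
    crossings = [(r, c)
                 for r, h_lo, h_hi in horizontal
                 for c, v_lo, v_hi in vertical
                 if h_lo < c < h_hi and v_lo < r < v_hi]

    # Follow the curve: from the O in row r go across to the X in that row,
    # then along that column to its O, which sits in row o_row[xs[r]].
    # Each closed loop found this way is one component (a knot has one).
    seen, components = set(), 0
    for start in range(n):
        if start in seen:
            continue
        components += 1
        r = start
        while r not in seen:
            seen.add(r)
            r = o_row[xs[r]]
    return horizontal, vertical, crossings, components
```

For instance, `grid_diagram([2, 3, 4, 0, 1], [0, 1, 2, 3, 4])` describes a single closed loop with three crossings. The design choice matters: the crossings depend only on the order of the row and column positions, not on their actual distances, which is what makes the representation topological.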

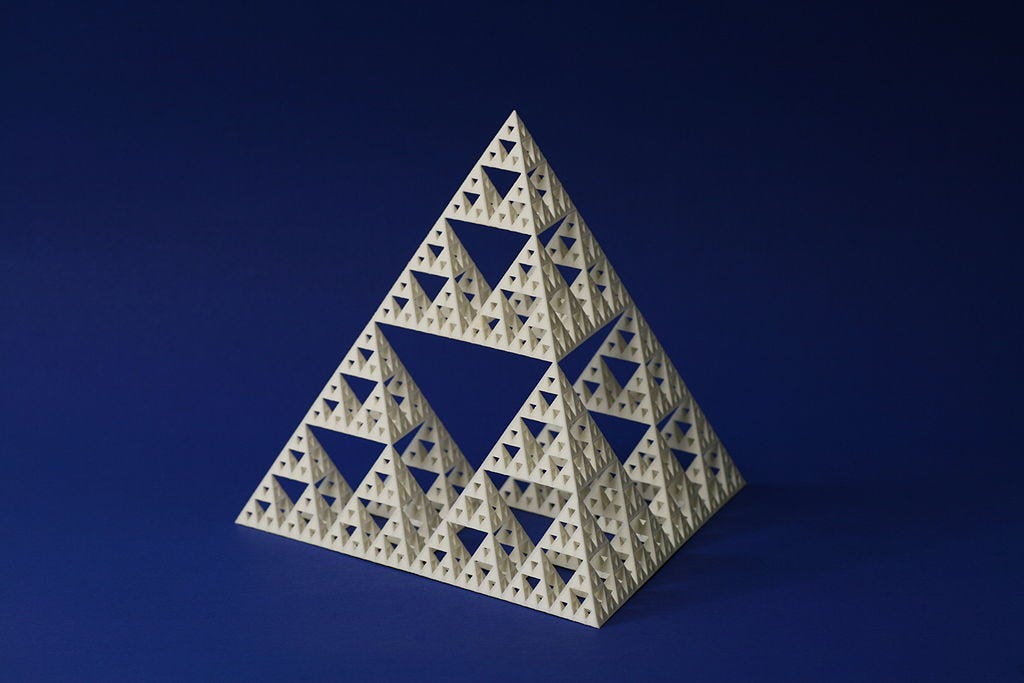

They also used the Cantor set as part of their representation. The Cantor set is a one-dimensional analog of the Menger sponge, constructed by iteratively removing the middle third of each line segment. The sponge is constructed by iteratively removing the central cubelet and the six face-center cubelets from each cube. Another related fractal is the Sierpinski carpet, a two-dimensional fractal constructed by dividing a square into nine smaller squares, removing the middle one, and repeating the process on the rest.
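The middle-thirds construction of the Cantor set translates directly into a membership test. This is a minimal sketch (the function name `in_cantor_level` is hypothetical) that uses exact fractions to avoid rounding errors: at each level, a point in the left or right third is rescaled to [0, 1] and examined again, while a point in the open middle third has been removed.

```python
from fractions import Fraction


def in_cantor_level(x, levels):
    """Check whether x (a Fraction in [0, 1]) survives `levels` middle-third removals."""
    for _ in range(levels):
        if x <= Fraction(1, 3):
            x = 3 * x          # zoom into the left third
        elif x >= Fraction(2, 3):
            x = 3 * x - 2      # zoom into the right third
        else:
            return False       # x lies in a removed open middle third
    return True
```

For example, 1/4 and 1/3 survive every level, while 1/2 is removed at the first step and 4/27 at the second.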

They recognized (and used) an analogy among the Cantor set, the Menger sponge, and the knots

They determined that points on the sponge’s front face whose coordinates both belonged to the Cantor set did not lie in a hole, and that there were no holes directly behind these points either. The same was true for the back face. They could therefore put the horizontal lines of the knot’s arcs on the front face and the vertical lines on the back face, and then connect them through the cube without encountering any holes.
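The claim in the paragraph above can be checked numerically with a standard description of the sponge (an assumption brought in here, not quoted from the article): at each level, a point is removed exactly when at least two of its three coordinates fall in the open middle third. If two coordinates stay in the Cantor set, only the third can ever land in a middle third, so the point is never removed. The sketch below takes z as the front-to-back direction; the helper names and sample points are hypothetical, and the check runs only to a finite number of levels.

```python
from fractions import Fraction

THIRD, TWO_THIRDS = Fraction(1, 3), Fraction(2, 3)


def _zoom(t):
    """One subdivision step for a coordinate: (in open middle third?, rescaled t)."""
    if t <= THIRD:
        return False, 3 * t
    if t >= TWO_THIRDS:
        return False, 3 * t - 2
    return True, 3 * t - 1


def in_menger_level(point, levels):
    """True if the point survives `levels` divide-and-remove steps of the sponge."""
    coords = list(point)
    for _ in range(levels):
        steps = [_zoom(t) for t in coords]
        # Removed cubelets are those in the middle third along two or more axes.
        if sum(middle for middle, _ in steps) >= 2:
            return False
        coords = [t for _, t in steps]
    return True


# Horizontal strand on the front face: y = 1/4 (a Cantor point), z = 0, x varies.
front = all(in_menger_level((Fraction(k, 81), Fraction(1, 4), Fraction(0)), 6)
            for k in range(82))
# Connector through the cube: x = 2/9 and y = 1/4 (both Cantor points), z varies.
through = all(in_menger_level((Fraction(2, 9), Fraction(1, 4), Fraction(k, 81)), 6)
              for k in range(82))
```

Both `front` and `through` come out true, whereas a connector whose x coordinate is not a Cantor point, such as the one through (1/2, 1/4), runs into the central hole.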

The students used this analogy to solve the problem

They recognized that they needed to show that, for each knot, some topology-preserving transformation was available that would align its corners with the Cantor set. They recognized that the Cantor set was analogous to the sponge, and they determined how to use that analogy to solve the problem. They could, for example, have used a Sierpinski carpet, which is also an analog of the Menger sponge, but they chose one over the other.
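One simple way to see why such an alignment is possible for grid-form knots is sketched below. It is an illustration under stated assumptions, not necessarily the students' construction: write each grid position k = 0, 1, ..., n − 1 in binary and read the bits as base-3 digits 0 and 2. The resulting numbers have no digit 1 in base 3, so they belong to the Cantor set, and they increase with k. Since the crossings of a grid diagram depend only on the order of positions, moving every corner to these points keeps the knot's pattern intact. The function name `cantor_coordinates` is hypothetical.

```python
from fractions import Fraction


def cantor_coordinates(n):
    """Return n increasing points of the Cantor set, one per grid position."""
    digits = max(1, (n - 1).bit_length())   # base-3 digits needed for n positions
    coords = []
    for k in range(n):
        value = Fraction(0)
        for i in range(digits):
            bit = (k >> (digits - 1 - i)) & 1          # most significant bit first
            value += Fraction(2 * bit, 3 ** (i + 1))   # binary 0/1 becomes ternary 0/2
        coords.append(value)
    return coords
```

For a 4 × 4 grid this gives the positions 0, 2/9, 2/3, and 8/9.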

They derived a method to assess whether they had solved the problem

They showed that they could, in fact, find such a transformation for each knot, which proved that every knot can be embedded in a Menger sponge.

They also sought to determine whether knots could be embedded in a Sierpinski tetrahedron, which is made by removing the middle of a tetrahedron (a triangular pyramid), leaving a stack of four smaller tetrahedra, then removing the middle of each of those, and so on. The students were not able to prove that all knots could be embedded in this fractal, even though, like the cube, a tetrahedron is topologically equivalent to a sphere.
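The tetrahedron construction can be sketched the same way as the sponge. In this minimal version (the names `sierpinski_tetrahedra`, `tetra_volume`, and `UNIT_TETRA` are hypothetical), each tetrahedron is replaced by the four half-size tetrahedra at its corners, which removes the middle piece, an octahedron. Every step multiplies the number of tetrahedra by 4 and halves the total volume.

```python
from fractions import Fraction


def midpoint(p, q):
    """Midpoint of two 3D points given as tuples of Fractions."""
    return tuple((a + b) / 2 for a, b in zip(p, q))


def sierpinski_tetrahedra(tetra, level):
    """Return the tetrahedra kept after `level` subdivision steps."""
    tetras = [tetra]
    for _ in range(level):
        next_tetras = []
        for verts in tetras:
            for i, corner in enumerate(verts):
                # Keep the corner tetrahedron at vertex i: the vertex itself plus
                # the midpoints of the three edges that meet there.
                others = [midpoint(corner, v) for j, v in enumerate(verts) if j != i]
                next_tetras.append((corner, *others))
        tetras = next_tetras
    return tetras


def tetra_volume(verts):
    """Volume of a tetrahedron: |det(b - a, c - a, d - a)| / 6."""
    a, b, c, d = verts
    u, v, w = ([p[k] - a[k] for k in range(3)] for p in (b, c, d))
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return abs(det) / 6


# A starting tetrahedron with one corner at the origin (volume 1/6).
UNIT_TETRA = tuple(tuple(Fraction(c) for c in v)
                   for v in ((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)))
```

After one step, `sierpinski_tetrahedra(UNIT_TETRA, 1)` holds 4 tetrahedra whose volumes add up to 1/12, half of the original 1/6.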

Conclusion

Today's GenAI models parrot known solutions to known problems. Humans can sometimes structure unknown problems, for example by identifying analogies with known problems, so that GenAI models can then describe solutions. In that case, the intelligence comes from the human, not the model. To be general, an artificial intelligence needs the skills these students showed. The inability of GenAI models to exercise these skills is not just a quantitative problem but a structural one.

That the problem was solved by students is useful for responding to the idea that GenAI models are at least as smart as high school students. More important for understanding artificial intelligence, however, is that the team exhibited properties of intelligence that today’s GenAI models simply cannot demonstrate. Today’s AI models do not create their own approaches to solving problems, derive their own structures, or consider how to use analogies, among other things. When GenAI models appear to be doing such things, they are parroting the language humans have used to talk about existing solutions.

If similar enough training materials exist, GenAI models could describe the reasoning that earlier humans used to solve a problem like this, but they cannot choose a representation, discover analogies, or derive methods to evaluate their own success. These are the parts of the problem-solving process that are generally glossed over by those claiming that artificial general intelligence is imminent. AI models cannot become as intelligent as humans, let alone more intelligent, if they depend on people to provide major parts of their solutions. For example, once the game of chess has been structured as a tree, computers are better than people at navigating that tree; they can beat the best human chess players. But without the insight that chess is a tree, chess computers would be little more than complex thermostats. Faster computation and larger memories will not be sufficient to solve the entire problem. No amount of fill-in-the-blank training will allow GenAI models to achieve general intelligence. Different computational methods will be required; these have yet to be invented, and inventions are difficult to forecast.
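The chess example turns on how mechanical tree search becomes once a human has framed a game as a tree. Chess itself is far too large to show here, so this sketch substitutes a hypothetical toy game: players alternately take one or two stones from a pile, and whoever takes the last stone wins. Each position is a node, each legal move is an edge to a child, and the minimax rule says the player to move wins if some move leads to a position that is lost for the opponent. The function name `player_to_move_wins` is illustrative only.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def player_to_move_wins(stones):
    """Toy game tree: take 1 or 2 stones; whoever takes the last stone wins."""
    # A position with no legal moves (0 stones) is a loss for the player to move,
    # because the opponent has just taken the last stone. Otherwise, the player
    # to move wins if any child position is a loss for the opponent.
    return any(not player_to_move_wins(stones - take)
               for take in (1, 2) if take <= stones)
```

Searching the tree reveals that positions with a multiple of three stones are losses for the player to move. The search is fast and exact, but the framing of the game as a tree of positions and moves was supplied by the programmer, not discovered by the code.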